Reducing Behind Live Latency

Introduction

One of the main benefits of HTTP-based streaming, and the reason it has become widely adopted as the mechanism to "broadcast" media over the Internet, is that it relies on standard web server and browser protocols. Standard web servers and proxy cache servers can be used to transport the media to the end-user. Instead of broadcasting a continuous video and audio feed, the feed is divided into files (chunks) that are stored on a web server (the origin) and downloaded over HTTP. The chunks can also be cached on proxy servers, which lets delivery scale in both traffic load and geographic reach. The video player concatenates the downloaded chunks and plays them back. This is the fundamental principle of HTTP-based streaming.

In the beginning, chunks were typically 9-10 seconds long, and video players were recommended to download at least three chunks before starting playback of the first one. This gave the player a buffer of roughly 30 seconds, enough to absorb a chunk that took a long time to download without interrupting playback. As a side effect, the time between when an event takes place and when an end-user sees it on their screen, called the behind live latency, was around 30 seconds. Reducing this latency is essential for applications where timing is critical, such as sports betting, and in sports coverage in general a lower behind live latency is part of the overall quality of experience.
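The arithmetic behind the 30-second figure can be written down directly. The minimal Python sketch below assumes a simplified model in which the player's playhead trails the newest published chunk by the amount of media it buffers before starting; `estimated_latency_s` and its `overhead_s` term are hypothetical, with `overhead_s` standing in for encoding, packaging and delivery delays that the text does not quantify.

```python
def estimated_latency_s(chunk_duration_s: float, buffered_chunks: int,
                        overhead_s: float = 0.0) -> float:
    """Rough lower bound on behind live latency for chunk-based HTTP streaming.

    Simplified model (an assumption): the player buffers `buffered_chunks`
    complete chunks before playing, so its playhead trails the newest
    published chunk by that much media. `overhead_s` is a hypothetical lump
    sum for encoding, packaging and delivery delays.
    """
    return chunk_duration_s * buffered_chunks + overhead_s


# Figures from the text: ~10 s chunks, three chunks buffered before playback.
print(estimated_latency_s(10, 3))  # 30.0
# Hypothetical comparison: 2 s chunks with the same three-chunk rule.
print(estimated_latency_s(2, 3))  # 6.0
```

Shortening chunks shrinks the buffer, but in this model each chunk must still be completely produced before it can be fetched; that is the constraint the chunked transfer approach in the use case below works around.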

The industry has acknowledged this problem, and several revisions to the streaming standards address it. Red Bee Media OTT monitors this development closely and is actively involved in implementing the standards, which are still under development.

Use Cases

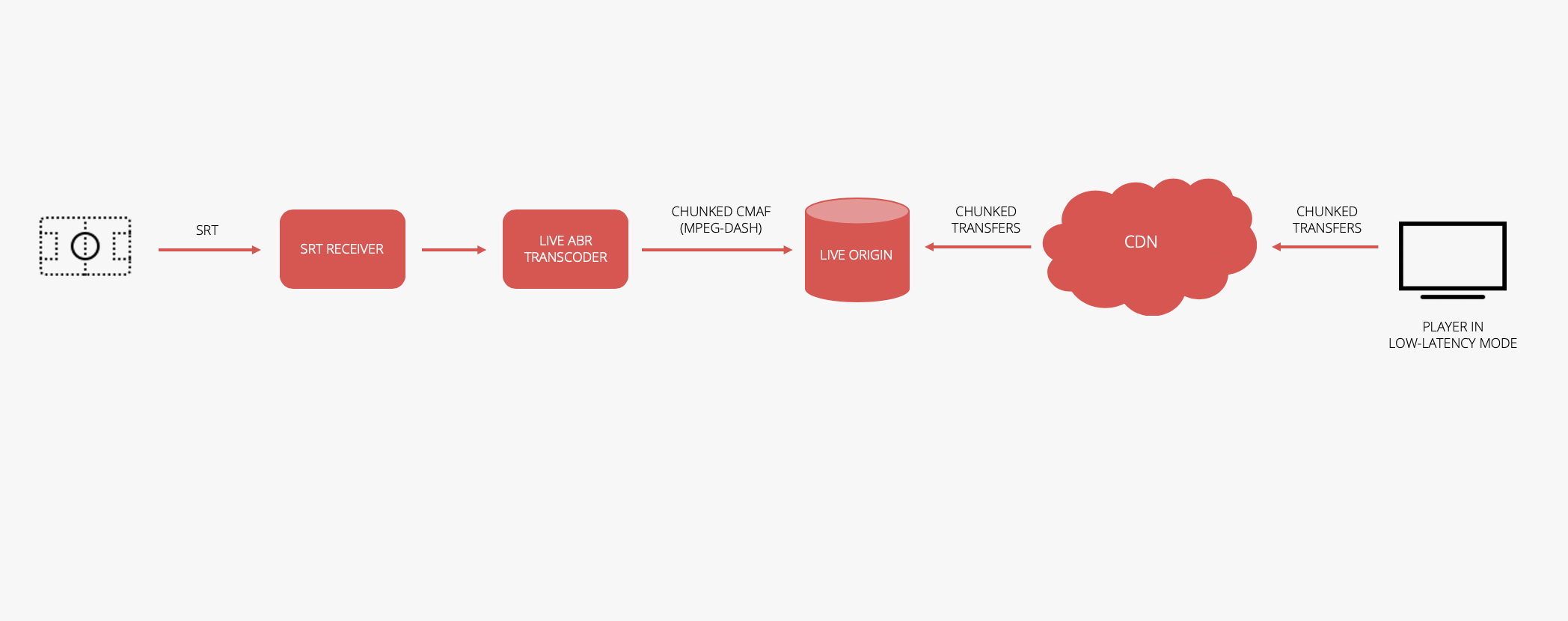

A major betting company sponsoring a sports event wants to make the event available to users on its betting site. The behind live latency for online viewers directly determines the size of the betting window, so reducing the latency, and with it the window, is of financial benefit. To achieve this, the OB production at the event transports the video feed to the Managed OTT platform using SRT, a low-latency contribution protocol. The SRT receiver on the OTT side feeds directly into the Live ABR transcoders, which create chunks and manifests based on the low-latency variant of MPEG-DASH and push them to an origin and CDN. The CDN and video players implement chunked transfers, which means that a file can be downloaded while it is still growing on the server. Combined with player algorithms that start processing video chunks before they are fully downloaded, this makes it possible to reduce the behind live latency to 3.5 seconds.
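To illustrate how a client can consume a file that is still growing, here is a minimal Python sketch that decodes an HTTP/1.1 chunked transfer-coded body incrementally (the wire format is defined in RFC 9112). In practice the HTTP client library or the player does this; the function name `decode_chunked` and the placeholder payloads are hypothetical, and any trailer fields after the last chunk are ignored.

```python
from typing import Iterable, Iterator


def decode_chunked(pieces: Iterable[bytes]) -> Iterator[bytes]:
    """Incrementally decode an HTTP/1.1 chunked transfer-coded body.

    `pieces` are byte strings in the order they arrive from the network,
    split at arbitrary points. Each chunk's payload is yielded as soon as it
    is complete, before the rest of the response exists.
    """
    it = iter(pieces)
    buf = bytearray()

    def read_more() -> None:
        nxt = next(it, None)
        if nxt is None:
            raise ValueError("connection closed before the last chunk")
        buf.extend(nxt)

    while True:
        # Chunk-size line: hex size, optional ";extensions", then CRLF.
        while b"\r\n" not in buf:
            read_more()
        end = buf.index(b"\r\n")
        size = int(bytes(buf[:end]).split(b";", 1)[0].strip(), 16)
        del buf[:end + 2]
        if size == 0:
            return  # last chunk; trailers are ignored in this sketch
        # Chunk data followed by its own CRLF.
        while len(buf) < size + 2:
            read_more()
        if buf[size:size + 2] != b"\r\n":
            raise ValueError("missing CRLF after chunk data")
        yield bytes(buf[:size])
        del buf[:size + 2]


# Placeholder bytes standing in for a media file that grows on the origin.
arrivals = [b"5\r\nmoof1", b"\r\n6\r\nmdat-1\r\n", b"0\r\n\r\n"]
for part in decode_chunked(arrivals):
    print(part)
# b'moof1'
# b'mdat-1'
```

Because `decode_chunked` is a generator, the first payload is available to the caller before the final piece of the response has arrived, which is the property a low latency player relies on.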

Configuration

To enable low latency mode and reserve a resource for it, contact the 24/7 operations team. They will set up an SRT receiver and provide its IP address and port, together with recommended settings for the SRT sender. Live events can then be scheduled and created on this resource, and end-users on one of the supported platforms automatically get a video player configured for low latency playback. End-users whose players do not support low latency playback receive the same streams, played back with the standard behind live latency.
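The receiver address and the recommended sender settings come from the operations team, so they cannot be given here. As a hedged illustration only: several software SRT senders (for example FFmpeg and `srt-live-transmit`) accept the connection as an `srt://host:port?option=value` URL, and the minimal Python sketch below assembles such a URL. It assumes the sender connects to the provisioned receiver in caller mode; the function name `srt_caller_url`, the documentation-range address and the `streamid` value are placeholders, and option names and units can differ between encoders, so check them against your encoder's documentation.

```python
from typing import Mapping
from urllib.parse import urlencode


def srt_caller_url(host: str, port: int, settings: Mapping[str, object]) -> str:
    """Build an srt:// URL for a sender that connects (caller mode) to the
    SRT receiver provisioned by the operations team.

    `settings` holds the recommended options supplied by the operations team;
    any key given there (including "mode") overrides the default below.
    """
    query = {"mode": "caller", **settings}
    return f"srt://{host}:{port}?{urlencode(query)}"


# Placeholder values: 203.0.113.10 is a documentation-only address.
print(srt_caller_url("203.0.113.10", 9000, {"streamid": "event-42"}))
# srt://203.0.113.10:9000?mode=caller&streamid=event-42
```

Hardware encoders such as Haivision units typically take the same information through configuration fields rather than a URL.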

How-to Guides

- Configure the SRT transmitter (for example a Haivision encoder or vMix) with the recommended settings provided by the OTT operations team.

- Set up a low latency resource (channel).

- Create a live event on this resource, specifying the start, end and availability of the event. The process is the same as for a standard live event.

- Start streaming from the SRT transmitter.

- Verify in the Customer Portal that the event is playable by going to the channel view for this resource. Note: the Customer Portal uses a standard player, so playback there will not be in low latency mode.

Catch-up is not enabled for the low latency stream by default; enabling it requires reserving an additional resource dedicated to catch-up. This will be improved in later increments of the product.

Data Sheet

| Feature | Specification |
| --- | --- |
| Contribution Protocol | SRT |
| Contribution Video | AVC/HEVC |
| Contribution Audio | AAC |
| Streaming Protocol | MPEG-DASH and CMAF Low Latency Mode (chunked CMAF) |
| Streaming Video | AVC/HEVC |
| Streaming Audio | AAC |
| Supported Platforms | Web (Chrome, Firefox, Edge); Android (roadmap); iOS / tvOS (roadmap, subject to Apple roadmap) |
| Behind Live Latency | Low Latency Mode: 3.5 seconds; Normal Mode: 20 seconds |

Technology

The audio and video processing pipeline for low latency mode differs from the standard processing pipeline, mainly because the low latency standards are still evolving and many use cases are well served by the standard behind live latency. Low latency mode places requirements on the feed contribution side, the transcoding side and the player side.

The contribution transport protocol is SRT instead of RTMP. The live ABR transcoder creates MPEG-DASH manifests that use SegmentTemplate instead of SegmentList. With SegmentTemplate, the player can derive the filenames of upcoming media chunks from a URL pattern and the chunk duration instead of reading each one from an explicit list, so it needs to fetch new MPEG-DASH manifests far less often. The media chunks the transcoder creates are made up of CMAF sub-chunks: each chunk contains independently addressable parts, so the player can start decoding video before the entire chunk has been downloaded.

The chunks are placed on an origin whose web server supports HTTP/1.1 chunked transfers, which means a client can start downloading a chunk while it is still growing on disk. The CDN between the origin and the video player must support this functionality as well.

The player runs in low latency mode: it starts decoding a chunk while it is still downloading and tries to stay as close to the live edge as possible. If the player falls behind, it subtly increases the playback speed to get back to the live edge.
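The two player-side behaviours described in this section, computing chunk URLs from a SegmentTemplate and nudging the playback rate to catch up with the live edge, can be sketched in Python. This is a simplified illustration, not how any particular player implements them. It assumes a number-based template (`$RepresentationID$` and `$Number$`, optionally with a `%0Nd` width) with a fixed `duration`/`timescale`, and wall-clock times in seconds. The proportional catch-up rule and its `gain` and `max_rate` values are hypothetical; the 3.5-second default target is the low latency figure from the data sheet, and the stream parameters in the example are made up.

```python
import math
import re

# Matches $RepresentationID$, $Number$ / $Number%05d$, and the $$ escape.
_TOKEN = re.compile(r"\$(RepresentationID|Number)(%0(\d+)d)?\$|\$\$")


def expand_template(template: str, rep_id: str, number: int) -> str:
    """Fill a number-based SegmentTemplate media pattern."""
    def substitute(m: re.Match) -> str:
        if m.group(0) == "$$":
            return "$"
        if m.group(1) == "RepresentationID":
            return rep_id
        width = int(m.group(3)) if m.group(3) else 0
        return str(number).zfill(width)

    return _TOKEN.sub(substitute, template)


def live_segment_number(now_s: float, availability_start_s: float,
                        duration: int, timescale: int,
                        start_number: int = 1,
                        period_start_s: float = 0.0) -> int:
    """Number of the segment being produced at wall-clock time `now_s`.

    With chunked transfer, a low latency player can request this segment
    while it is still growing instead of waiting for it to complete.
    """
    segment_s = duration / timescale
    elapsed = now_s - availability_start_s - period_start_s
    return start_number + math.floor(elapsed / segment_s)


def catchup_rate(latency_s: float, target_s: float = 3.5,
                 gain: float = 0.1, max_rate: float = 1.05) -> float:
    """Hypothetical proportional rule: speed up slightly when behind target."""
    if latency_s <= target_s:
        return 1.0
    return min(1.0 + gain * (latency_s - target_s), max_rate)


# Made-up stream: 2 s segments (duration=2000, timescale=1000), AST at t=0.
n = live_segment_number(61.0, 0.0, duration=2000, timescale=1000)
print(n)  # 31
print(expand_template("video/$RepresentationID$/seg-$Number%05d$.m4s", "v1", n))
# video/v1/seg-00031.m4s
print(catchup_rate(latency_s=5.0))  # 1.05 (capped at max_rate)
```

Because the segment number follows from the clock and the template, the player only has to re-read the manifest when something in it changes, which is the saving SegmentTemplate offers over SegmentList. Capping the rate keeps the speed-up subtle enough that viewers are unlikely to notice it.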